This blog post is a version of a talk given at the 2018 ACM Computer Supported Cooperative Work and Social Computing (CSCW) Conference based on a paper written by Richmond Wong, Deirdre Mulligan, Ellen Van Wyk, John Chuang, and James Pierce, entitled Eliciting Values Reflections by Engaging Privacy Futures Using Design Workbooks, which was honored with a best paper award. Find out more on our project page, our summary blog post, or download the paper: [PDF link] [ACM link]

In the work described in our paper, we created a set of conceptual speculative designs to explore privacy issues around emerging biosensing technologies, technologies that sense human bodies. We then used these designs to help elicit discussions about privacy with students training to be technologists. We argue that this approach can be useful for Values in Design and Privacy by Design research and practice.

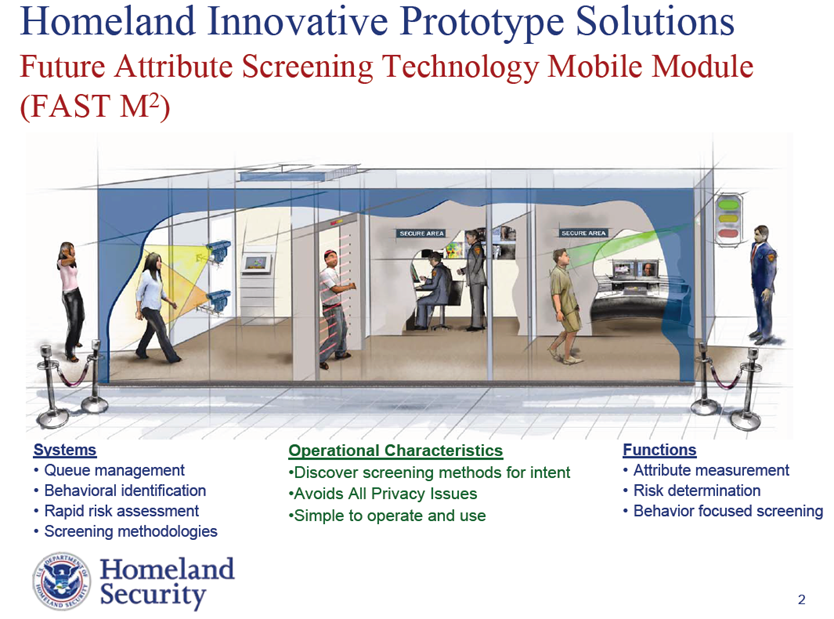

Let me start with a motivating example, which I’ve discussed in previous talks. In 2007, the US Department of Homeland Security proposed a program to try to predict criminal behavior in advance of the crime itself, using thermal sensing, computer vision, eye tracking, gait sensing, and other physiological signals. And supposedly it would “avoid all privacy issues.” But it seems pretty clear that privacy was not fully thought through in this project. Now Homeland Security projects actually do go through privacy impact assessments, and I would guess that in this case, they would probably go through the impact assessment process, find that the system doesn’t store the biosensed data, and conclude that privacy is protected. But while this might address one conception of privacy related to storing data, there are other conceptions of privacy at play. There are still questions here about consent and movement in public space, about data use and collection, or about fairness and privacy from algorithmic bias.

While that particular imagined future hasn’t come to fruition, a lot of these types of sensors are now becoming available as consumer devices, used in applications ranging from health and quantified self, to interpersonal interactions, to tracking and monitoring. And it often seems like privacy isn’t fully thought through before new sensing devices and services are publicly announced or released.

A lot of existing privacy approaches, like privacy impact assessments, are deductive, checklist-based, or assume that privacy problems are already known and well-defined in advance, which often isn’t the case. Furthermore, the term “design” in discussions of Privacy by Design is often seen as a way of providing solutions to problems identified by law, rather than viewing design as a generative set of practices useful for understanding what privacy issues might need to be considered in the first place. We argue that speculative design-inspired approaches can help explore and define problem spaces of privacy in inductive, situated, and contextual ways.

Design and Research Approach

We created a design workbook of speculative designs. Workbooks are collections of conceptual designs drawn together to allow designers to explore and reflect on a design space. Speculative design is a practice of using design to ask social questions, by creating conceptual designs or artifacts that help create or suggest a fictional world. We can create speculative designs to explore different configurations of the world and to imagine and understand possible alternative futures, which helps us think through issues that have relevance in the present. So rather than start with trying to find design solutions for privacy, we wanted to use design workbooks and speculative designs together to create a collection of designs to help us explore what the problem space of privacy might look like with emerging biosensing technologies.

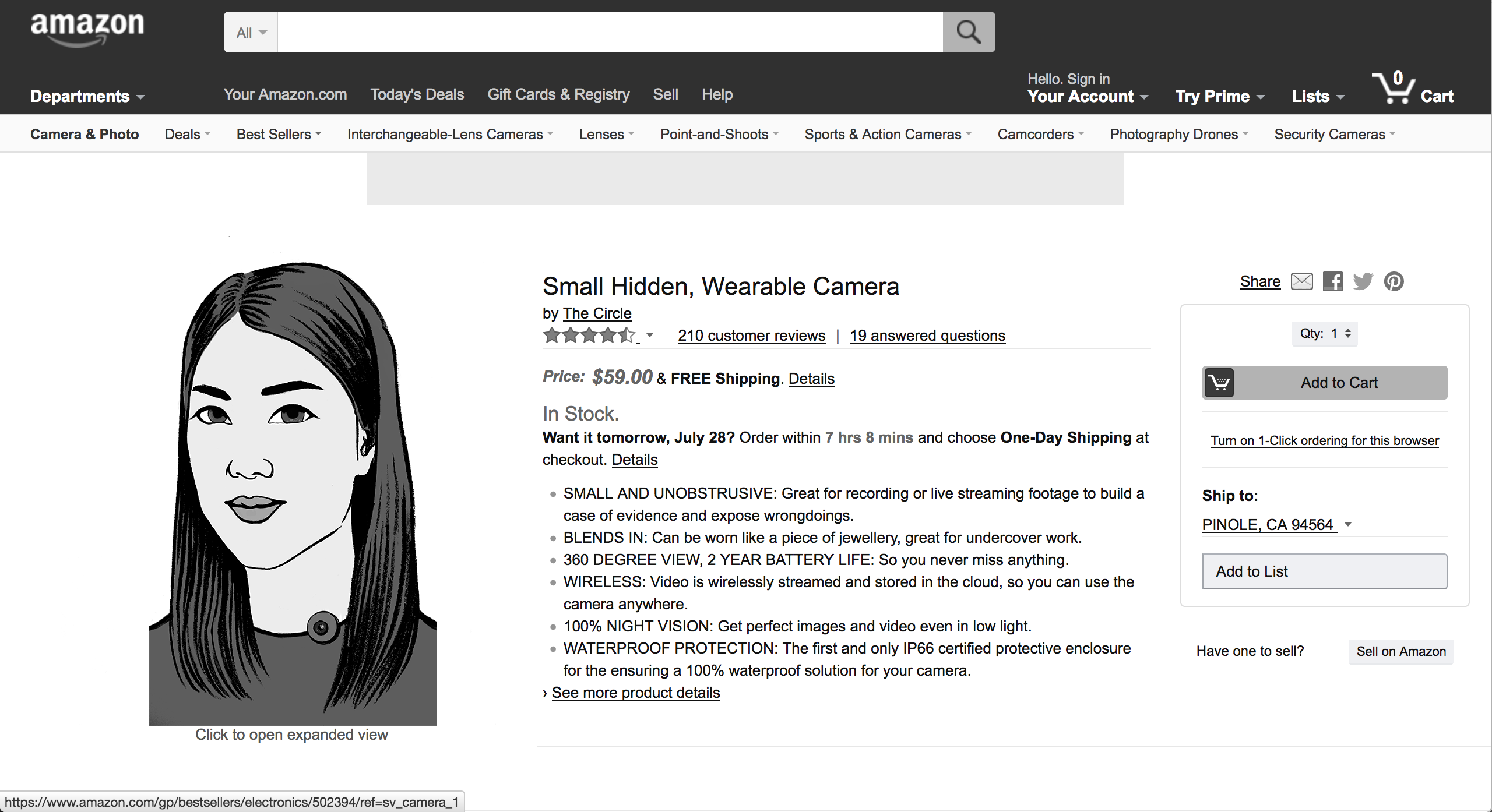

In our prior work, we created a design workbook to do this exploration and reflection. Inspired by recent research, science fiction, and trends from the technology industry, we created a couple dozen fictional products, interfaces, and webpages of biosensing technologies. These included smart camera enabled neighborhood watch systems, advanced surveillance systems, implantable tracking devices, and non-contact remote sensors that detect people’s heart rates. This process is documented in a paper from Designing Interactive Systems. These were created as part of a self-reflective exercise, for us as design researchers to explore the problem space of privacy. However, we wanted to know how non-researchers, particularly technology practitioners, might discuss privacy in relation to these conceptual designs.

A note on how we’re approaching privacy and values. Following other values in design work and privacy research, we want to avoid providing a single universalizing definition of privacy as a social value. We recognize privacy as inherently multiple – something that is situated and differs within different contexts and situations.

Our goal was to use our workbook as a way to elicit values reflections and discussion about privacy from our participants – rather than looking for “stakeholder values” to generate design requirements for privacy solutions. In other words, we were interested in how technologists-in-training would use privacy and other values to make sense of the designs.

Growing regulatory calls for “Privacy by Design” suggest that privacy should be embedded into all aspects of the design process, and at least partially done by designers and engineers. Because of this, the ability for technology professionals to surface, discuss, and address privacy and related values is vital. We wanted to know how people training for those jobs might use privacy to discuss their reactions to these designs. We conducted an interview study, recruiting 10 graduate students from a West Coast US University who are training to go into technology professions, most of whom had prior tech industry experience via prior jobs or internships. At the start of the interview, we gave them a physical copy of the designs and explained that the designs were conceptual, but didn’t tell them that the designs were initially made to think about privacy issues. In the following slides, I’ll show a few examples of the speculative design concepts we showed – you can see more of them in the paper. And then I’ll discuss the ways in which participants used values to make sense of or react to some of the designs.

Design examples

This design depicts an imagined surveillance system for public spaces like airports that automatically assigns threat statuses to people by color-coding them. We intentionally left it ambiguous how the design makes its color-coding determinations to try to invite questions about how the system classifies people.

In our designs, we also began to iterate on ideas relating to tracking implants, and different types of social contexts they could be used in. Here’s a scenario advertising a workplace implantable tracking device called TruWork. Employers can subscribe to the service and make their employees implant these devices to keep track of their whereabouts and work activities to improve efficiency.

We also re-imagined the implant as “CoupleTrack,” an implantable tracking chip for couples to use, as shown in this infographic.

Findings

We found that participants centered values in their discussions when looking at the designs – predominantly privacy, but also related values such as trust, fairness, security, and due process. We found eight themes of how participants interacted with the designs in ways that surfaced discussion of values, but I’ll highlight three here: Imagining the designs as real; seeing one’s self as multiple users; and seeing one’s self as a technology professional. The rest are discussed in more detail in the paper.

Imagining the Designs as Real

Even though participants were aware that the designs were imagined, some participants imagined the designs as seemingly real by thinking about long-term effects in the fictional world of the design. This design (pictured above) is an easily hideable, wearable, live streaming HD camera. One participant imagined what could happen to social norms if these became widely adopted, saying “If anyone can do it, then the definition of wrong-doing would be questioned, would be scrutinized.” He suggests that previously unmonitored activities would become open for surveillance and tracking, like “are the nannies picking up my children at the right time or not? The definition of wrong-doing will be challenged”. Participants became actively involved in fleshing out and creating the worlds in which these designs might exist. This reflection is also interesting because it begins to consider some secondary implications of widespread adoption, highlighting potential changes in social norms with increasing data collection.

Seeing One’s Self as Multiple Users

Second, participants took multiple user subject positions in relation to the designs. One participant read the webpage for TruWork and laughed at the design’s claim to create a “happier, more efficient workplace,” saying, “This is again, positioned to the person who would be doing the tracking, not the person who would be tracked.” She notes that the website is really aimed at the employer. She then imagines herself as an employee using the system, saying:

If I called in sick to work, it shouldn’t actually matter if I’m really sick. […] There’s lots of reasons why I might not wanna say, “This is why I’m not coming to work.” The idea that someone can check up on what I said—it’s not fair.

This participant put herself in both the viewpoint of an employer using the system and as an employee using the system, bringing up issues of workplace surveillance and fairness. This allowed participants to see values implications of the designs from different subject positions or stakeholder viewpoints.

Seeing One’s Self as a Technology Professional

Third, participants also looked at the designs through the lens of being a technology practitioner, relating the designs to their own professional practices. Looking at the design that automatically flags and detects supposedly suspicious people, one participant reflected on his self-identification as a data scientist and the values implications of predicting criminal behavior with data when he said:

the creepy thing, the bad thing is, like—and I am a data scientist, so it’s probably bad for me too, but—the data science is predicting, like Minority Report… [and then half-jokingly says] …Basically, you don’t hire data scientists.

Here he began to reflect on how his practices as a data scientist might be implicated in this product’s creepiness: his initial propensity to want to use the data to predict whether subjects are criminals might not be a good way to approach this problem, and might have implications for due process.

Another participant compared the CoupleTrack design to a project he was working on. He said:

[CoupleTrack] is very similar to our idea. […] except ours is not embedded in your skin. It’s like an IOT charm which people [in relationships] carry around. […] It’s voluntary, and that makes all the difference. You can choose to keep it or not to keep it.

In comparing the fictional CoupleTrack product to the product he’s working on in his own technical practice, the value of consent, and how one might revoke consent, became very clear to this participant. Again, we thought it was compelling that the designs led some participants to begin reflecting on the privacy implications in their own technical practices.

Reflections and Takeaways

Given the workbooks’ ability to help elicit reflections on and discussion of privacy in multiple ways, we see this approach as useful for future Values in Design and Privacy by Design work.

The speculative workbooks helped open up discussions about values, similar to some of what Katie Shilton identifies as “values levers,” activities that foreground values, and cause them to be viewed as relevant and useful to design. Participants’ seeing themselves as users to reflect on privacy harms is similar to prior work showing how self-testing can lead to discussion of values. Participants looking at the designs from multiple subject positions evokes value sensitive design’s foregrounding of multiple stakeholder perspectives. Participants reflected on the designs both from stakeholder subject positions and through the lenses of their professional practices as technology practitioners in training.

While Shilton identifies a range of people who might surface values discussions, we see the workbook as an actor to help surface values discussions. By depicting some provocative designs that raised some visceral and affective reactions, the workbooks brought attention to questions about potential sociotechnical configurations of biosensing technologies. Future values in design work might consider creating and sharing speculative design workbooks for eliciting values reflections with experts and technology practitioners.

More specifically, with this project’s focus on privacy, we think that this approach might be useful for “Privacy by Design”, particularly for technologists trying to surface discussions about the nature of the privacy problem at play for an emerging technology. We analyzed participants’ responses using Mulligan et al.’s privacy analytic framework. The paper discusses this in more detail, but the important thing is that participants went beyond just saying privacy and other values are important to think about. They began to grapple with specific, situated, and contextual aspects of privacy – such as considering different ways to consent to data collection, or noting different types of harms that might emerge when the same technology is used in a workplace setting compared to an intimate relationship. Privacy professionals are looking for tools to help them “look around corners,” to help understand what new types of problems related to privacy might occur in emerging technologies and contexts. This approach provides a potential new tool for privacy professionals in addition to many of the current top-down, checklist approaches, which assume that the concepts of privacy at play are well known in advance. Speculative design practices can be particularly useful here – not to predict the future, but in helping to open and explore the space of possibilities.

Thank you to my collaborators, our participants, and the anonymous reviewers.

Paper citation: Richmond Y. Wong, Deirdre K. Mulligan, Ellen Van Wyk, James Pierce, and John Chuang. 2017. Eliciting Values Reflections by Engaging Privacy Futures Using Design Workbooks. Proc. ACM Hum.-Comput. Interact. 1, CSCW, Article 111 (December 2017), 26 pages. DOI: https://doi.org/10.1145/3134746